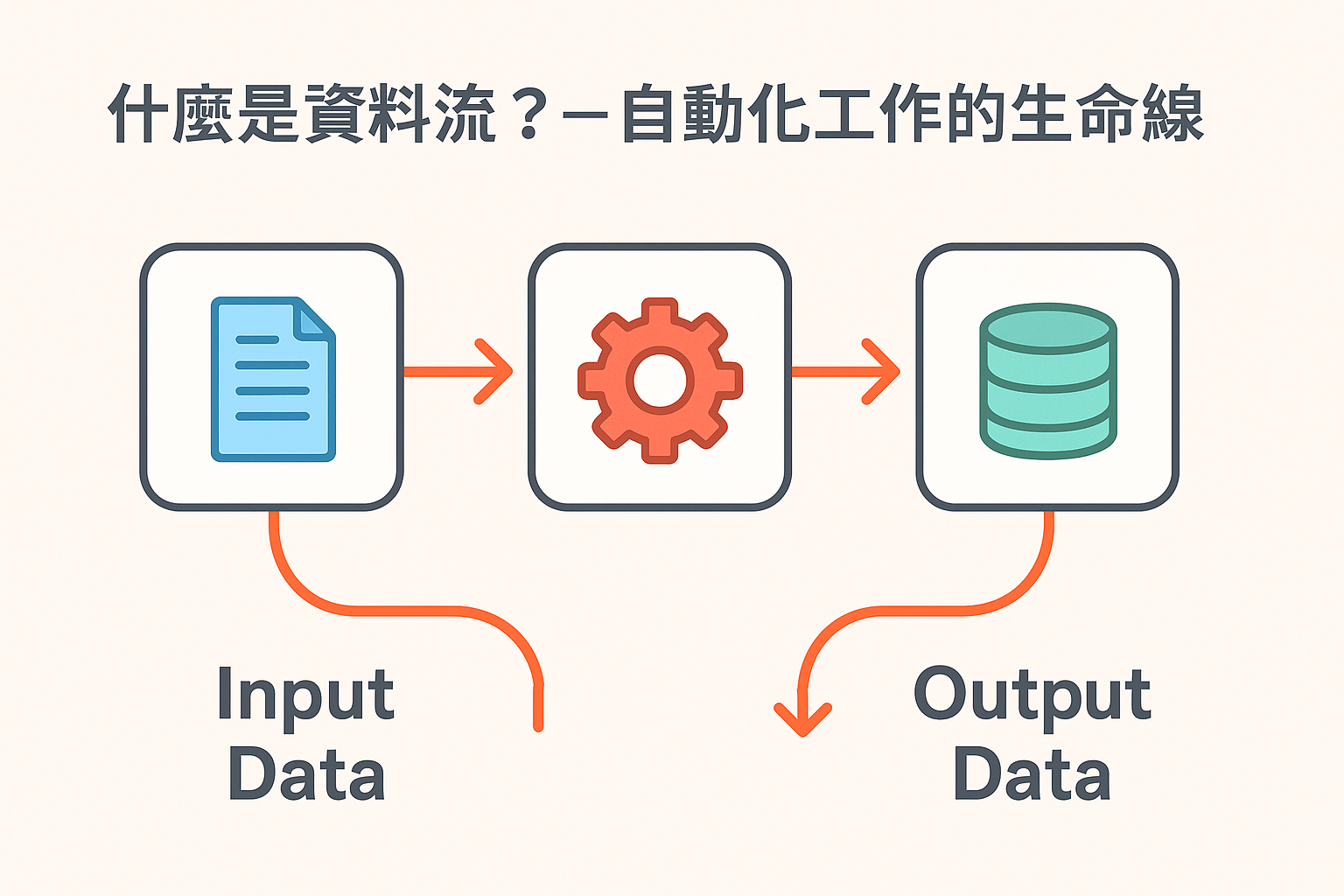

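After a while the site has picked up some traffic, so this post shows how to use that traffic to build a recommendation system. The idea is to link any articles that were viewed within the same session, then group the articles using the Louvain community detection method covered in an earlier post.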

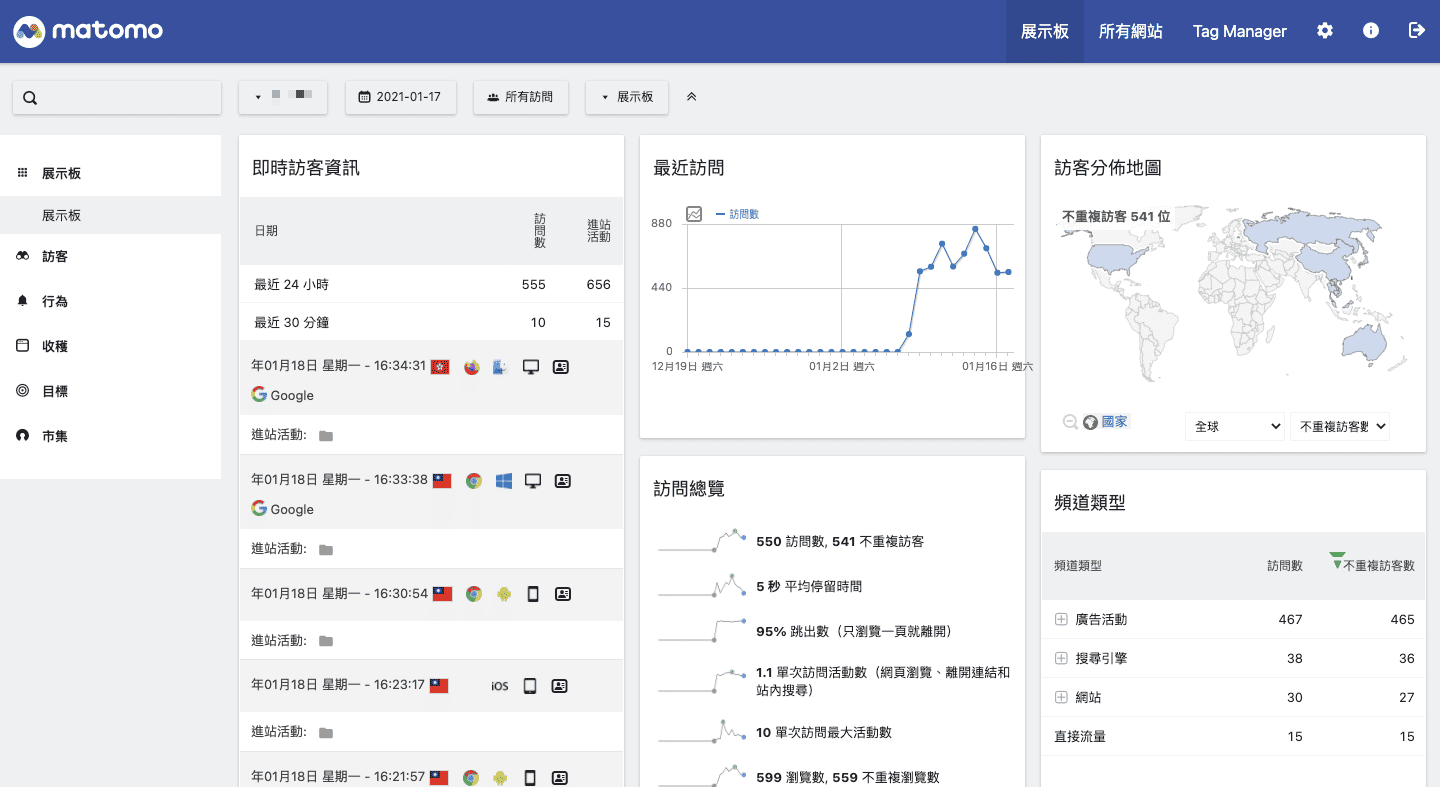

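## Data source

The data is mostly what GA (Google Analytics) already provides. SessionId, however, has to be set up beforehand; see Simon's website for how to do that.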

```python
import re
import itertools

import pandas as pd
import networkx as nx
import matplotlib.pyplot as plt
from community import community_louvain  # installed as the python-louvain package

# Jupyter notebook magic: render plots inline
%matplotlib inline

df = pd.read_csv('data/smiliu.csv')
df.head()
```

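## Data cleaning

```python
# Keep only the first run of digits in the page path as the article id
df['Page'] = df['Page'].apply(lambda x: re.search(r'\d+', x)[0])
# Keep only the part of the title before the first '|'
df['PageTitle'] = df['PageTitle'].apply(lambda x: x.split('|')[0])
df.head()
```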

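## Building weights between articles

When a single session views more than one article, every pair of articles in that session gets a weight equal to the reciprocal of the number of articles viewed in the session. The weights for each pair are then summed across all sessions.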

```python
# Collect the pages viewed in each session
session_group = df.groupby('SessionId')['Page'].agg(list).reset_index()
# Keep only sessions that viewed more than one page
session_group = session_group[session_group['Page'].str.len() > 1]
# Sort so that each pair always appears in the same order
session_group['Page'] = session_group['Page'].apply(sorted)
# Weight of a session's pairs = 1 / number of pages in the session
session_group['weight'] = session_group['Page'].apply(lambda x: 1 / len(x))
# Every 2-page combination in the session, one row per pair
session_group['page_pair'] = session_group['Page'].apply(lambda x: list(itertools.combinations(x, 2)))
session_group = session_group.explode('page_pair')
session_group[['page_1', 'page_2']] = session_group['page_pair'].apply(pd.Series)

# Sum the weights of identical pairs across sessions
graph_data = session_group[['page_1', 'page_2', 'weight']]
graph_data = graph_data.groupby(['page_1', 'page_2']).agg('sum').reset_index()
graph_data.head()
```
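To check the weighting rule by hand, here is a minimal pure-Python sketch of the same idea on a tiny example. The session ids and article ids are made up for illustration, and `pair_weights` is a hypothetical helper, not part of the pipeline in this post.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical sessions: session id -> article ids viewed (made-up data).
sessions = {
    "s1": ["101", "102"],
    "s2": ["101", "102", "103"],
    "s3": ["104"],  # single-article session, contributes no pairs
}


def pair_weights(sessions):
    """Sum 1/len(pages) over every article pair viewed in the same session."""
    weights = defaultdict(float)
    for pages in sessions.values():
        if len(pages) < 2:
            continue
        weight = 1 / len(pages)
        # Sorting keeps each pair in a consistent order, e.g. ("101", "102").
        for pair in combinations(sorted(pages), 2):
            weights[pair] += weight
    return dict(weights)


weights = pair_weights(sessions)
for pair, weight in sorted(weights.items()):
    print(pair, round(weight, 3))
```

The pair ("101", "102") appears in both a two-article and a three-article session, so it ends up with 1/2 + 1/3, while pairs seen only in the three-article session get 1/3.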

## Clustering with the Louvain algorithm

```python
# Build a weighted, undirected graph from the article pairs
G = nx.from_pandas_edgelist(graph_data, 'page_1', 'page_2', ['weight'])
# Louvain community detection; returns a dict of node -> community id
partition = community_louvain.best_partition(G, resolution=1)

size = len(set(partition.values()))  # number of communities
pos = nx.spring_layout(G)
cm = plt.get_cmap('RdYlBu', size)  # one colour per community
print('community: ', size)

plt.figure(1, figsize=(8, 8))
for count, com in enumerate(set(partition.values()), start=1):
    # Convert the colormap's RGB bytes into a hex colour code
    color_code = '#%02x%02x%02x' % (cm(count / size, bytes=True)[:3])
    list_nodes = [node for node in partition.keys() if partition[node] == com]
    nx.draw_networkx_nodes(G, pos, nodelist=list_nodes, node_size=30, node_color=color_code)

nx.draw_networkx_labels(G, pos, font_size=10)
nx.draw_networkx_edges(G, pos, width=0.1, alpha=1)
plt.show()
```

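## Clustering results

```python
# One row per article, labelled with the community it was assigned to
page_label = df.drop_duplicates(['Page', 'PageTitle'])[['Page', 'PageTitle']].copy()
page_label['community_id'] = page_label['Page'].apply(lambda x: partition.get(str(x), None))
# Articles that never shared a session are not in the graph and are dropped here
page_label.sort_values('community_id').dropna().reset_index(drop=True)
```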

The clustering looks reasonable: the articles fall into three groups, GA&GTM, GA&python, and python. I still need to find time to validate it, though.
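This post stops at the clustering step, so how recommendations are actually served is not shown. One possible way to turn the communities into recommendations is sketched below, under the assumption that a reader of one article is shown other articles from the same community, ordered by pair weight. The `recommend` function and all data here are hypothetical.

```python
# Hypothetical Louvain output: article id -> community id (made-up values).
partition = {"101": 0, "102": 0, "103": 0, "104": 1, "105": 1}

# Hypothetical summed pair weights, keyed by sorted article-id pairs.
weights = {("101", "102"): 0.83, ("101", "103"): 0.33, ("104", "105"): 0.5}


def recommend(page, partition, weights, top_n=3):
    """Return up to top_n articles from page's community, strongest link first."""
    community = partition.get(page)
    if community is None:
        return []  # article not in the graph, nothing to recommend

    candidates = [p for p, c in partition.items() if c == community and p != page]

    def link(other):
        # Pairs are stored in sorted order; a missing pair counts as weight 0.
        return weights.get(tuple(sorted((page, other))), 0.0)

    # Sort by descending weight, breaking ties by article id.
    return sorted(candidates, key=lambda p: (-link(p), p))[:top_n]


print(recommend("101", partition, weights))
```

Falling back to a weight of 0 keeps articles that share a community but were never viewed together in the list, just ranked last.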